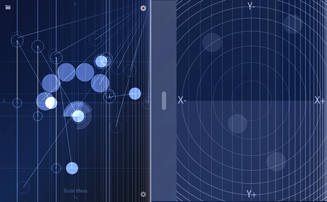

The next update to TC-Data is done, and that means turning my attention back to TC-11. It looks like I'll be pushing out a quick update just for 1.8.x Audiobus support for iOS 8. As a palate cleanser (after the monotony of testing), I'm playing with camera brightness. Here are some thoughts about bringing a new sensor into an app.

The fun is definitely front-loaded. Research the video framework, load up a camera in a test app, and get some images to appear on screen. What part of the video are we going to use as a controller? I think there are two good candidates: brightness and motion.

I'm familiar with calculating brightness from Processing (all the way back to Scanner I). The challenge with iOS is, frankly, finding the least gunky way to get the data I need. So I Google some examples of brightness following and find one that works.
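For comparison, the pixel-crunching approach to brightness following boils down to something like the Swift sketch below. It is not the example code mentioned above. It assumes a frame has already been copied out of the camera's pixel buffer into a byte array in 32-bit BGRA order (a common iOS camera output format), it uses Rec. 601 luma weights as one reasonable definition of brightness, and `averageBrightness` is a hypothetical helper name. Note that it touches every pixel of every frame, which adds up at video rates.

```swift
/// Average brightness (0.0...1.0) of a 32-bit BGRA frame stored row by row.
/// Assumes 4 bytes per pixel in B, G, R, A order and bytesPerRow >= width * 4,
/// since camera buffers may pad each row past the visible pixels.
func averageBrightness(pixels: [UInt8], width: Int, height: Int, bytesPerRow: Int) -> Double {
    guard width > 0, height > 0 else { return 0 }
    var total = 0.0
    for row in 0..<height {
        let rowStart = row * bytesPerRow
        for column in 0..<width {
            let i = rowStart + column * 4
            let b = Double(pixels[i])
            let g = Double(pixels[i + 1])
            let r = Double(pixels[i + 2])
            // Rec. 601 luma: green contributes most, blue least.
            total += 0.299 * r + 0.587 * g + 0.114 * b
        }
    }
    // Divide by pixel count and by 255 to land in 0...1.
    return total / (Double(width * height) * 255.0)
}
```

Passing `bytesPerRow` separately (on iOS, `CVPixelBufferGetBytesPerRow` reports it) lets the loop skip any row padding instead of reading it as pixels.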

I start with low-quality video to save on processing power, since we're not going to see images from the camera. But that's a bust: the frame rate is too slow, and I do care about that.
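If frame rate is the deciding factor, it helps to measure it rather than eyeball it. A minimal sketch, assuming each frame's timestamp has been logged in seconds (on iOS, `CMTimeGetSeconds(CMSampleBufferGetPresentationTimeStamp(sampleBuffer))` is one way to get it); `measuredFrameRate` is a hypothetical helper:

```swift
/// Estimates frames per second from a run of frame timestamps in seconds.
/// Returns nil when there are too few frames (or no elapsed time) to measure.
func measuredFrameRate(timestamps: [Double]) -> Double? {
    guard timestamps.count >= 2,
          let first = timestamps.first,
          let last = timestamps.last,
          last > first else { return nil }
    // N timestamps span N - 1 frame intervals.
    return Double(timestamps.count - 1) / (last - first)
}
```

Averaging over a run of frames, rather than timing a single interval, smooths out the occasional late frame.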

Brightness following is go!

A quick look at Instruments shows I'm chugging as I create and destroy image data just to get the brightness, so all that code gets chucked. As it turns out (as some photographers may have known all along), there is a BrightnessValue entry in the camera's EXIF dictionary. Free data! I'll take it when I can.
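Reading that entry is a dictionary lookup rather than image processing. The sketch below assumes the frame's metadata attachments have already been bridged to a Swift dictionary (on iOS, `CMCopyDictionaryOfAttachments` on the sample buffer is one way to obtain them). It uses the string values `"{Exif}"` and `"BrightnessValue"`, which correspond to ImageIO's `kCGImagePropertyExifDictionary` and `kCGImagePropertyExifBrightnessValue` keys, so it runs without the Apple frameworks; `exifBrightness` is a hypothetical name. Per the EXIF spec, BrightnessValue is an APEX value and can go negative in dim light.

```swift
/// Pulls the EXIF BrightnessValue out of a frame's metadata attachments.
/// "{Exif}" and "BrightnessValue" are the string values of ImageIO's
/// kCGImagePropertyExifDictionary and kCGImagePropertyExifBrightnessValue.
func exifBrightness(from attachments: [String: Any]) -> Double? {
    guard let exif = attachments["{Exif}"] as? [String: Any],
          let raw = exif["BrightnessValue"] else { return nil }
    // Bridged values arrive as NSNumber; also accept plain Swift numbers.
    switch raw {
    case let value as Double: return value
    case let value as Float: return Double(value)
    case let value as Int: return Double(value)
    default: return nil
    }
}
```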

As I mentioned, the fun is all at the beginning. Dropping this into the TC apps will take a lot of testing. Brightness values depend on the camera settings, and the controller should react consistently in both high- and low-light conditions. The stream has to start and stop at the correct times in the app lifecycle, the controller drawing needs to stick to the side of the screen that has the camera, etc. But it'll be there soon.
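On the consistency point, one possible approach is to clamp the raw APEX reading to a working range, rescale it to 0...1, and smooth it with an exponential moving average so the controller doesn't jitter from frame to frame. This is a sketch under assumptions: the `BrightnessController` type, the -2...8 range, and the 0.2 smoothing factor are illustrative placeholders, not values from the TC apps, and would need tuning against real high- and low-light readings.

```swift
/// Turns raw EXIF brightness readings into a steady 0...1 controller value.
/// The bounds and smoothing factor are illustrative guesses, not measured values.
struct BrightnessController {
    var lowerBound = -2.0   // hypothetical "dark room" reading
    var upperBound = 8.0    // hypothetical "bright daylight" reading
    var smoothing = 0.2     // weight given to each new reading (0...1)
    private(set) var value: Double? = nil

    /// Feeds one reading and returns the smoothed, clamped controller value.
    mutating func update(brightness: Double) -> Double {
        let clamped = min(max(brightness, lowerBound), upperBound)
        let normalized = (clamped - lowerBound) / (upperBound - lowerBound)
        // Exponential moving average; the first reading is taken as-is.
        let next = value.map { $0 + smoothing * (normalized - $0) } ?? normalized
        value = next
        return next
    }
}
```

A larger `smoothing` tracks lighting changes faster but lets more flicker through; a smaller one is steadier but lags behind.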